
High-performance, high-availability file systems that can store big data and deliver high data throughput are essential for many companies. However, comparing the advantages and limitations of different file systems is challenging. In this blog post, we compare four high-performance, highly available file systems: CephFS, HDFS, GPFS, and CDFS (Comtrade Distributed FS, based on EOS).

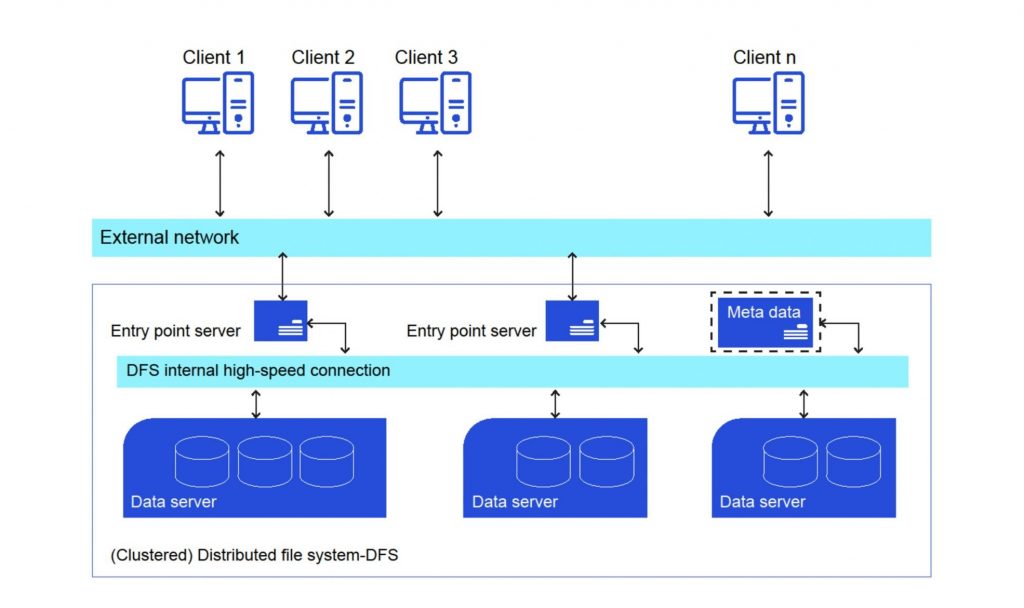

(Clustered) Distributed file system

To compare these file systems, Comtrade developed specific test scenarios in January 2023 that focused on high throughput, low latency, and expandability. The testing environment included a single-node deployment of each distributed file system, identical hardware components (motherboards, network adapters, and memory), and clusters spread across separate but identical HDDs. The hardware components of the testing environment were as follows:

Client: Linux – 2 Cores, 4 GB Memory, 80 GB HDD

Client: Windows – 4 Cores, 10 GB Memory, 80 GB HDD

Servers: Linux – 8 Cores, 20 GB Memory, 500 GB HDD

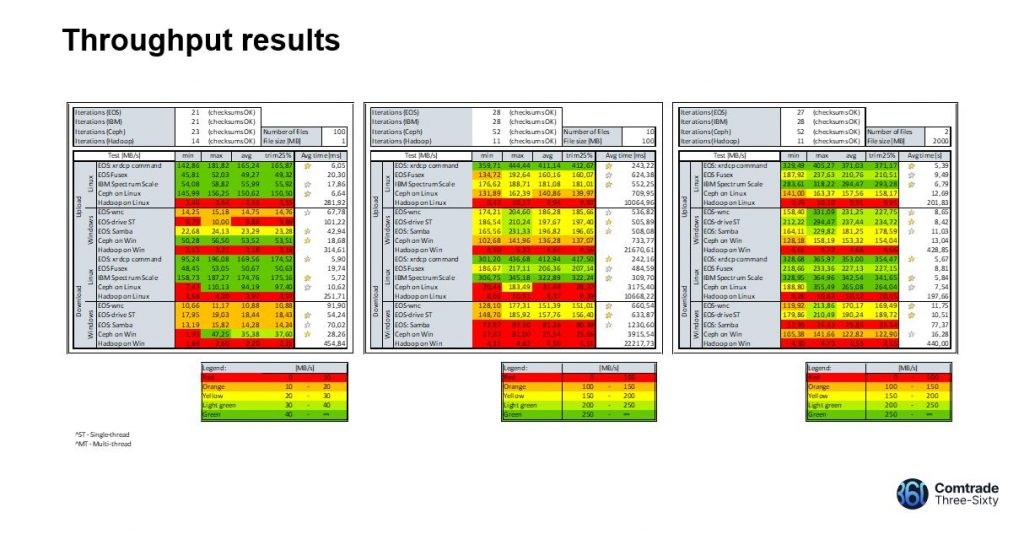

Three file sizes were used to test each system’s performance: small files (1 MB), medium files (100 MB), and large files (2 GB).

For each file size, two tests were conducted: download and upload. Each test involved creating new test files on the server or client machine, clearing the file caches, transferring the files between the server and client machines, verifying their MD5 hashes, calculating the transfer speed, and removing the created and copied test files.
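The test procedure above can be sketched as a small Python script. This is a minimal sketch, not Comtrade's actual test harness: it assumes the distributed file system is mounted as a local directory on the client, and the file name `testfile.bin` and the function names (`make_test_file`, `md5_of`, `run_transfer_test`) are hypothetical. Dropping the page cache (for example `echo 3 > /proc/sys/vm/drop_caches` on Linux) requires root, so the sketch leaves that step to the caller.

```python
import hashlib
import os
import shutil
import tempfile
import time

CHUNK = 1024 * 1024  # read/write in 1 MiB blocks to bound memory use

# Test file sizes from the article, assuming decimal units (1 MB = 10**6 bytes).
SIZES = {"small": 1_000_000, "medium": 100_000_000, "large": 2_000_000_000}


def make_test_file(path: str, size_bytes: int) -> None:
    """Create a file of exactly size_bytes filled with random data."""
    with open(path, "wb") as f:
        remaining = size_bytes
        while remaining > 0:
            n = min(CHUNK, remaining)
            f.write(os.urandom(n))
            remaining -= n


def md5_of(path: str) -> str:
    """Return the hex MD5 digest of a file, read block by block."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(CHUNK), b""):
            h.update(block)
    return h.hexdigest()


def run_transfer_test(src_dir: str, dst_dir: str, size_bytes: int) -> float:
    """Copy one new test file from src_dir to dst_dir and return throughput in MB/s.

    Upload: src_dir = client directory, dst_dir = DFS mount point (hypothetical).
    Download: the same call with the two directories swapped.
    """
    src = os.path.join(src_dir, "testfile.bin")  # hypothetical file name
    dst = os.path.join(dst_dir, "testfile.bin")
    try:
        make_test_file(src, size_bytes)
        # Page-cache dropping would go here; it needs root, so it is omitted.
        start = time.perf_counter()
        shutil.copyfile(src, dst)
        with open(dst, "rb+") as f:
            os.fsync(f.fileno())  # include the time to persist data, not just cache it
        elapsed = time.perf_counter() - start
        if md5_of(src) != md5_of(dst):
            raise RuntimeError("MD5 mismatch: transferred file is corrupted")
        return (size_bytes / 1_000_000) / max(elapsed, 1e-9)
    finally:
        # Remove both the created and the copied test files.
        for p in (src, dst):
            if os.path.exists(p):
                os.remove(p)


if __name__ == "__main__":
    # Two temporary directories stand in for the client disk and the DFS mount.
    with tempfile.TemporaryDirectory() as client, tempfile.TemporaryDirectory() as dfs:
        mbps = run_transfer_test(client, dfs, SIZES["small"])
        print(f"small-file upload: {mbps:.1f} MB/s")
```

Passing the client directory as `src_dir` and the mount point as `dst_dir` models an upload; swapping them models a download. Reporting decimal MB/s is our assumption, since the original does not state its unit convention.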

The fault tolerance of each file system was also tested. CephFS and HDFS used JBOD instead of RAID, while GPFS used IBM Spectrum Scale RAID. CDFS, which is EOS-based, used RAIN, a variant of RAID applied across JBOD nodes. Each file system also had its own fault-tolerance features, such as snapshots, replication, and file and directory layouts.
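To illustrate how spreading redundancy across nodes lets a layout survive a node failure, here is a simplified single-parity sketch in Python. It is a RAID-5-style XOR illustration under our own assumptions, not EOS's actual RAIN implementation, which may use different codes and tolerate more than one failure; the function names are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    return bytes(x ^ y for x, y in zip(a, b))


def stripe_with_parity(data: bytes, n_data_nodes: int) -> List[bytes]:
    """Split data into n_data_nodes equal chunks (zero-padded) plus one XOR parity chunk.

    Chunk i would be stored on node i; the last chunk goes to a parity node.
    """
    if n_data_nodes < 1:
        raise ValueError("need at least one data node")
    chunk_len = -(-len(data) // n_data_nodes)  # ceiling division
    padded = data.ljust(chunk_len * n_data_nodes, b"\x00")
    chunks = [padded[i * chunk_len:(i + 1) * chunk_len] for i in range(n_data_nodes)]
    parity = reduce(xor_bytes, chunks)
    return chunks + [parity]


def rebuild_missing(stripes: List[Optional[bytes]], missing_index: int) -> bytes:
    """Recover the chunk of one failed node by XOR-ing all surviving chunks."""
    survivors = [c for i, c in enumerate(stripes) if i != missing_index]
    return reduce(xor_bytes, survivors)


if __name__ == "__main__":
    data = b"hello distributed file systems"
    stripes = stripe_with_parity(data, 4)  # 4 data nodes + 1 parity node
    lost = stripes[2]
    damaged = list(stripes)
    damaged[2] = None  # simulate a failed node
    assert rebuild_missing(damaged, 2) == lost
    print("rebuilt chunk:", rebuild_missing(damaged, 2))
```

Single parity survives exactly one lost node per stripe; layouts that must survive several simultaneous failures need more parity chunks per stripe.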

Based on the test scenarios and results, GPFS and CDFS (Comtrade Distributed FS) performed best for small, medium, and large files on Linux, while neither HDFS nor CephFS was the top performer for any file size. Note that this comparison covered only performance; other essential features, such as software maintenance and upgradeability, were not compared.

Throughput results

High-availability metrics, such as MTBF (mean time between failures), TBW (terabytes written), failover resync time, and resync time for a replaced disk, should be compared in future testing. High-availability requirements such as load balancing, data scalability, geographical diversity, and backup to tape should also be considered when selecting a high-performance, highly available file system.
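One common way to turn MTBF into an availability figure is the steady-state formula A = MTBF / (MTBF + MTTR). MTTR (mean time to repair) does not appear in the original comparison; this sketch assumes that failover and disk resync time would serve as the repair time. The function names and the inputs in the usage example are hypothetical, not results from Comtrade's tests.

```python
HOURS_PER_YEAR = 24 * 365


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability A = MTBF / (MTBF + MTTR)."""
    if mtbf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTBF must be positive and MTTR non-negative")
    return mtbf_hours / (mtbf_hours + mttr_hours)


def downtime_hours_per_year(mtbf_hours: float, mttr_hours: float) -> float:
    """Expected yearly downtime implied by the availability above."""
    return (1.0 - availability(mtbf_hours, mttr_hours)) * HOURS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical inputs: 10,000 h between failures, 10 h failover/resync.
    a = availability(10_000, 10)
    print(f"availability: {a:.5f}, downtime: {downtime_hours_per_year(10_000, 10):.1f} h/year")
```

The formula makes the trade-off explicit: shortening resync time raises availability just as effectively as lengthening the time between failures.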

Choosing a high-performance, highly available file system that fulfills given requirements is a challenging task for companies. The comparison of CephFS, HDFS, GPFS, and CDFS (Comtrade Distributed FS) highlights their advantages and limitations in terms of performance and fault tolerance under the tested scenarios. However, other factors, such as software maintenance, upgradeability, and high-availability metrics and requirements, must also be considered before making a final decision.

A detailed presentation on high-performance system testing is available here: LINK
